Providers of high-risk AI systems must comply with safety, transparency and accountability requirements. Yet many of the non-technical professionals I’ve talked to, who are being asked to assess the unique risks of integrating AI into their systems and workflows, feel overwhelmed or unprepared.

What is a high risk AI system?

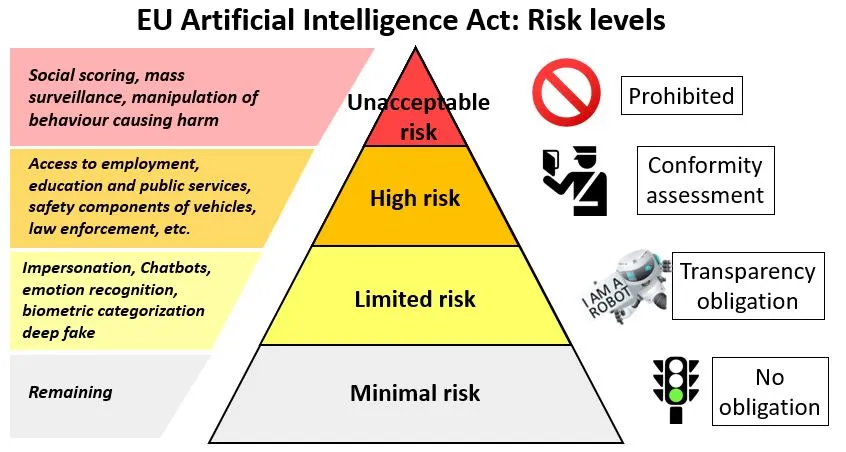

The EU AI Act categorises AI systems based on risk levels: unacceptable risk, high risk, limited risk and low or minimal risk.

Determining the risk level involves considering the system’s impact on safety, fundamental rights and society. If an AI system has the potential to cause harm, as medical diagnosis devices and autonomous vehicles do, it falls into the high-risk category because of its impact on public safety. If it could violate our right to privacy or be discriminatory, as with tools used for hiring or for educational assessment and grading, it is deemed high risk. If it affects many people or critical services, for example credit scoring or critical infrastructure management, it is also deemed high risk.

How can I navigate the complex world of AI regulation and compliance?

I had the pleasure of collaborating with a company specialising in AI risk and compliance on a month-long series of semi-structured interviews and workshops for a global enterprise that was keen to learn more about AI safety. Together we assessed the state of knowledge, existing controls, AI use cases, monitoring and assessment mechanisms, relevant standards and regulations, and approval processes within the enterprise.

We found that, while existing controls for data governance, anonymisation, security, consent, and compliance with GDPR and industry-specific standards were in place, additional measures were required for the enterprise’s high-risk AI systems. These included regular monitoring and auditing of AI systems, high-quality datasets, making AI outputs understandable and interpretable, and supporting human oversight.

Transparency

Interpretable models allow the people overseeing AI systems to understand how AI decisions are made and to identify potential sources of unfairness. To match GOV.UK’s content design guidance, explanations must be pitched to an appropriate reading age, so we recommended replacing SHAP force plots with simple narratives that explain how each factor pushes a decision one way or the other.
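To give a feel for what a simple narrative could look like, here is a minimal Python sketch. It is not the approach we delivered, just an illustration. It assumes the signed contribution of each factor has already been calculated elsewhere (for example as SHAP values). The function name `narrate`, the 0.2 cut-off between "slightly" and "strongly", and the example factors are all hypothetical.

```python
def narrate(contributions, outcome="approval", top_n=3):
    """Turn signed factor contributions into plain-language sentences.

    `contributions` maps a factor name to a signed number: positive values
    push towards the outcome, negative values push away from it.
    """
    # Rank factors by the size of their effect, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

    sentences = []
    for name, value in ranked[:top_n]:
        if value == 0:
            continue  # a factor with no effect is not worth a sentence
        direction = "towards" if value > 0 else "away from"
        # Hypothetical cut-off; a real one would be agreed with reviewers.
        strength = "strongly" if abs(value) >= 0.2 else "slightly"
        sentences.append(
            f"{name.capitalize()} pushed the decision {strength} {direction} {outcome}."
        )
    return " ".join(sentences)


# Made-up contributions for a single decision.
example = {
    "income": 0.35,
    "missed payments": -0.25,
    "account age": 0.05,
    "postcode": 0.0,
}
print(narrate(example))
```

Limiting the output to the top few factors keeps the explanation short enough to read at a glance, at the cost of hiding smaller effects that a reviewer may still want to see on request.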

Human Oversight

Human oversight is a requirement for high-risk AI. This doesn’t mean that there must be a person involved at every step of the decision-making process, although some interpret the requirement that way. The idea is that people verify and validate the inputs, outputs and functioning of the AI system to ensure accuracy, fairness and reliability. People also assess the system’s performance against predefined metrics and benchmarks, and intervene when necessary to correct errors, mitigate biases or address unexpected outcomes. Knowing that people are actively involved in overseeing AI systems instils trust and confidence.

I know of one company that employed a data analyst to read through daily logs of prompts and responses by eye, looking for data security breaches and misuse.

But this doesn’t seem efficient or scalable.

To address concerns about the efficiency and scalability of manual oversight, we proposed three things: benchmarking models, creating appropriate metrics and measures for continuous monitoring, and architecting simple UI patterns that let users quickly identify issues with AI system performance. All of this was aligned with the enterprise’s existing risk assessment and governance processes.
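As a rough illustration of continuous monitoring against a benchmark, the Python sketch below compares each day’s observed metrics with agreed benchmark values and returns only the ones a person needs to look at. It is a sketch under assumptions, not the system we proposed: the names `MetricCheck` and `review_queue`, the metric names, and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MetricCheck:
    name: str
    benchmark: float  # value agreed during benchmarking
    tolerance: float  # acceptable absolute deviation from the benchmark


def review_queue(checks, observed):
    """Return the metrics a person should review, worst deviation first."""
    flagged = []
    for check in checks:
        value = observed.get(check.name)
        if value is None:
            # Missing data is itself something a reviewer should see.
            flagged.append((check.name, "no data", float("inf")))
            continue
        deviation = abs(value - check.benchmark)
        if deviation > check.tolerance:
            detail = f"{value:.2f} vs benchmark {check.benchmark:.2f}"
            flagged.append((check.name, detail, deviation))
    # Put the largest deviations at the top of the reviewer's list.
    flagged.sort(key=lambda item: item[2], reverse=True)
    return [(name, detail) for name, detail, _ in flagged]


# Hypothetical checks and one day's made-up observations.
checks = [
    MetricCheck("accuracy", benchmark=0.90, tolerance=0.03),
    MetricCheck("approval_rate_gap", benchmark=0.02, tolerance=0.02),
    MetricCheck("refusal_rate", benchmark=0.05, tolerance=0.02),
]
observed = {"accuracy": 0.84, "approval_rate_gap": 0.03}

for name, detail in review_queue(checks, observed):
    print(f"Needs review: {name} ({detail})")
```

The point of the design is that the reviewer sees a short, ranked list instead of a full day of logs, while the decision about what to do with each flagged metric stays with them.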

This way, decision authority ultimately rests with a person. Clear visualisations facilitate informed judgements and assessments by giving decision-makers an understanding of how the system behaves, how and why that behaviour may change over time, and the foresight to identify potential issues.

If you would like to know more, please get in touch!